The hard thing about Marketing Mix Models

Marketing measurement has been a cat-and-mouse game over the last few years. As new tracking methods emerge, technical limitations and regulations follow, forcing marketers to adapt and innovate. The perfect example is cookie consent in Europe, where traditional user tracking, the foundation of classic Marketing Attribution, has become increasingly restricted, to the point that some brands track fewer than 50% of their visitors.

The Rise of Aggregate Data Models

While this shift in privacy regulations is generally positive for consumers, traditional attribution has become more challenging. As a result, we're seeing a significant rise in alternative methods that don't require user-level tracking:

Marketing Mix Modeling (MMM) and incrementality testing have gained popularity as powerful alternatives. Both rely on aggregated data rather than individual user tracking. Interestingly, the AdTech giants have taken notice, since it is generally in their interest to show that their platforms deliver value: Meta launched Robyn and Google introduced Meridian, both open-source packages designed to democratize MMM.

Just to be clear, because there have been some false claims about it:

Since you bring your own data and the code of these models is publicly available, neither Robyn nor Meridian “grades its own homework” by favoring Meta or Google channels, respectively. And if you’re still paranoid about it, just label your channels “A”, “B”, “C”.

There's also PyMC-Marketing, an independent player in this space.

The exciting part? These tools are making sophisticated marketing measurement accessible to everyone. Each brings its own methodologies and innovations to the mix and fuels discussion and development. Over time, this competition should keep raising the bar and lead to even better models.

You don't need to be a Python expert or statistics guru to get started. In theory, a simple ".fit().predict()" workflow makes it relatively straightforward to get results with a few dozen lines of code copied from the demo notebooks.
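To demystify what such a fit/predict call does under the hood, here is a deliberately tiny sketch. It is not the API of Robyn, Meridian, or PyMC-Marketing: the class `ToyMMM`, its fixed `decay` parameter, and the choice of a geometric adstock (carry-over) transform followed by ordinary least squares are illustrative assumptions. Real packages add saturation curves, control variables, priors, and hyperparameter search on top.

```python
from typing import List, Sequence


def geometric_adstock(spend: Sequence[float], decay: float) -> List[float]:
    """Carry a fraction `decay` of the previous period's adstocked value forward."""
    out: List[float] = []
    carry = 0.0
    for x in spend:
        carry = x + decay * carry
        out.append(carry)
    return out


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class ToyMMM:
    """Hypothetical toy MMM: sales = intercept + sum_k beta_k * adstock(spend_k)."""

    def __init__(self, decay: float = 0.5) -> None:
        self.decay = decay
        self.coef: List[float] = []

    def _design(self, channels: List[Sequence[float]]) -> List[List[float]]:
        # One row per period: [1 (intercept), adstocked spend of each channel]
        cols = [geometric_adstock(ch, self.decay) for ch in channels]
        return [[1.0] + [col[t] for col in cols] for t in range(len(cols[0]))]

    def fit(self, channels: List[Sequence[float]], sales: Sequence[float]) -> "ToyMMM":
        X = self._design(channels)
        k = len(X[0])
        # Ordinary least squares via the normal equations (X^T X) beta = X^T y
        xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
        xty = [sum(row[i] * y for row, y in zip(X, sales)) for i in range(k)]
        self.coef = solve(xtx, xty)
        return self  # returning self enables .fit(...).predict(...)

    def predict(self, channels: List[Sequence[float]]) -> List[float]:
        return [sum(b * v for b, v in zip(self.coef, row)) for row in self._design(channels)]
```

Usage looks like `ToyMMM(decay=0.5).fit([search, social], sales).predict([search, social])`. The point of the sketch is that a good fit only says the predicted totals match; it says nothing yet about whether the individual coefficients are right.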

So where’s the hard part?

A few years ago, a common sentiment was that data collection would be the hard part, but it has become surprisingly manageable:

Pulling spend, clicks, and impressions for your online channels automatically has become a commodity, thanks to platforms like Supermetrics, Funnel, Fivetran, or Airbyte

Offline channels like TV and out-of-home advertising require more manual work but are generally manageable

The main complexity comes from accounting for external factors like seasonality, pricing effects, and market influences

What’s the real challenge then?

Validation: figuring out whether your model is correct.

Well-established statistical methods exist for evaluating prediction models by comparing predicted values against actuals. You can assess quite precisely how good your CLV or churn prediction is, but MMM presents a unique challenge:

In theory, you build a model to predict revenue or new customers from certain input factors (e.g., marketing impressions or spend). However, the goal is not just to predict the overall outcome but to accurately explain the contributions of the different input factors.

For instance, if you spend $50,000 on Google Ads, $30,000 on Meta, and $20,000 on TikTok, predicting total sales is just a means to an end. The crucial question is: how much did each channel contribute? This breakdown is the entire point of MMM, and it's notoriously difficult to validate.
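The validation problem can be made concrete with made-up numbers. Assume the Meta budget is always set to 60% of the Google budget, so the two spend series move in lockstep, and assume two hypothetical models with different returns per dollar (`model_a`, `model_b`). Both predict exactly the same sales every week, so no fit metric can tell them apart, yet in the first week model A credits Google with 100,000 and Meta with 30,000 in revenue, while model B credits Google with 40,000 and Meta with 90,000.

```python
from typing import Dict, List

# Hypothetical weekly spend: Meta is always 60% of Google, so the two series
# are perfectly correlated. TikTok varies independently.
spend: Dict[str, List[float]] = {
    "google": [50_000, 40_000, 60_000, 45_000],
    "meta": [30_000, 24_000, 36_000, 27_000],
    "tiktok": [20_000, 25_000, 15_000, 22_000],
}

# Two hypothetical linear models (revenue per dollar of spend per channel).
model_a = {"google": 2.0, "meta": 1.0, "tiktok": 1.5}
model_b = {"google": 0.8, "meta": 3.0, "tiktok": 1.5}


def predict(model: Dict[str, float], week: int) -> float:
    """Total predicted revenue for one week."""
    return sum(model[ch] * spend[ch][week] for ch in model)


def contributions(model: Dict[str, float], week: int) -> Dict[str, float]:
    """Revenue the model attributes to each channel in one week."""
    return {ch: model[ch] * spend[ch][week] for ch in model}


# Identical predictions every week: 2.0*g + 1.0*(0.6g) == 0.8*g + 3.0*(0.6g)
for week in range(4):
    assert abs(predict(model_a, week) - predict(model_b, week)) < 1e-6

print(contributions(model_a, 0))
print(contributions(model_b, 0))
```

Real data is rarely this perfectly collinear, but budgets that are scaled up and down together produce exactly this kind of ambiguity, which is why an external check on the per-channel numbers is needed.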

The Solution: Lift Tests and Experiments

Lift tests and experiments have emerged as the primary way to validate models. While they've faced some criticism, they've established themselves as the gold standard for cross-checking MMMs and for measuring the true incrementality of marketing activities.

What’s the general idea of an incrementality test?

You turn a certain activity on or off and keep everything else the same.

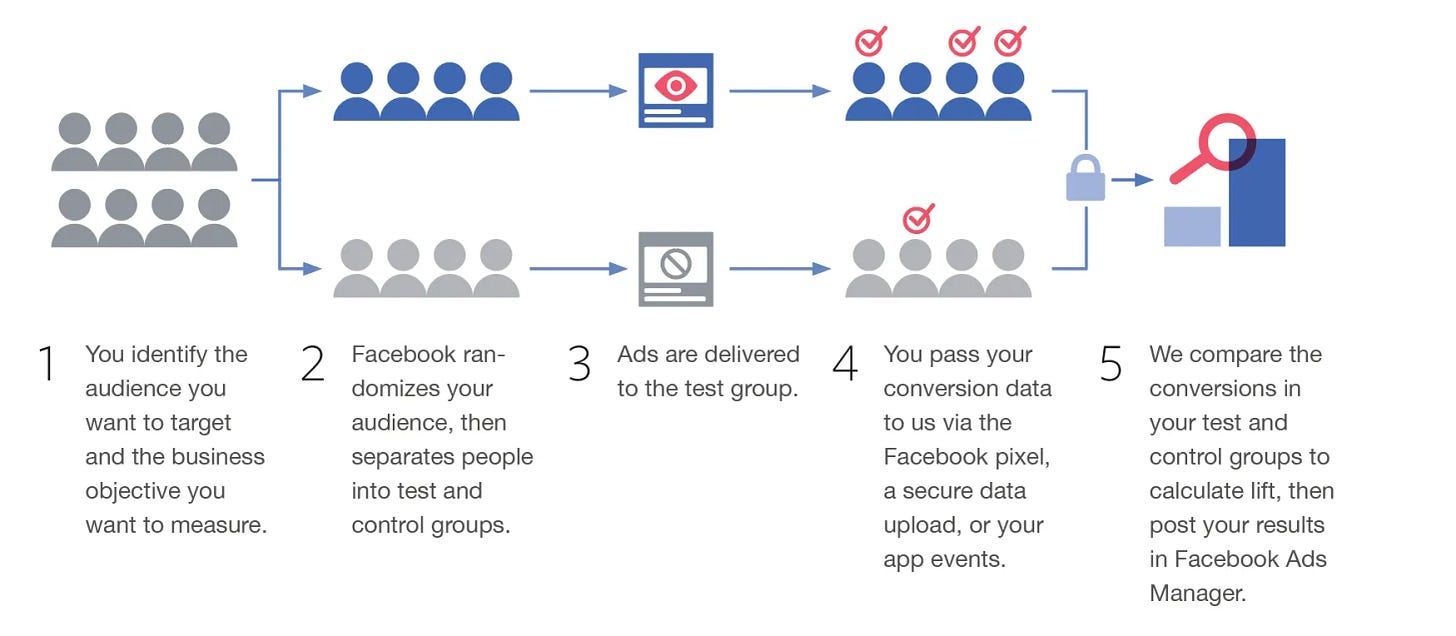

This can happen at the user level, for example in online ads or CRM, where a randomly selected portion of the target audience (the holdout group) isn’t exposed to the ad. Meta and Google both offer pre-built solutions integrated into their ad managers.
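A minimal sketch of how a user-level holdout can be read out, assuming the holdout was assigned at random and using a pooled two-proportion z-test with the normal approximation. The function name `holdout_lift` and the counts in the usage note are hypothetical; the platforms' built-in lift tools may use different statistics.

```python
import math


def holdout_lift(conv_exposed: int, n_exposed: int,
                 conv_holdout: int, n_holdout: int) -> dict:
    """Compare conversion rates of an exposed group and a random holdout group."""
    p_e = conv_exposed / n_exposed
    p_h = conv_holdout / n_holdout
    # Pooled two-proportion z-test (normal approximation)
    p_pool = (conv_exposed + conv_holdout) / (n_exposed + n_holdout)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_exposed + 1 / n_holdout))
    z = (p_e - p_h) / se
    # Two-sided p-value from the standard normal CDF
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return {
        "relative_lift": (p_e - p_h) / p_h,
        # Conversions in the exposed group that would not have happened without the ad
        "incremental_conversions": (p_e - p_h) * n_exposed,
        "z": z,
        "p_value": p_value,
    }
```

With invented numbers, `holdout_lift(2_700, 90_000, 250, 10_000)` compares a 3.0% to a 2.5% conversion rate: a 20% relative lift, or about 450 incremental conversions, rather than the 2,700 a last-click view might credit to the ad.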

For channels where it’s not possible to exclude individual users, you could run a geo experiment instead: cities, states, or even countries that behave similarly are compared against each other after activities are changed in a subset of them.

Since the channels (or campaigns) you want to test, whose performance you think you already know, are usually already running, a test typically forces you to cut their spend significantly while keeping everything else identical. If the channel is truly performing well, you should then observe a drop in sales; if sales don't drop, the channel was not delivering incremental effects. The control groups mentioned above are what let you correct for factors such as seasonality.
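The role of the control regions can be illustrated with the simplest possible estimator, a difference-in-differences. This is a sketch under assumptions: the function name `diff_in_diff` and the weekly sales figures are hypothetical, the treated and control regions are assumed to be of similar size and to follow parallel trends, and production geo tests typically use more robust methods such as synthetic controls.

```python
from statistics import mean
from typing import Sequence


def diff_in_diff(treat_pre: Sequence[float], treat_post: Sequence[float],
                 ctrl_pre: Sequence[float], ctrl_post: Sequence[float]) -> float:
    """Difference-in-differences estimate of the effect of a spend change.

    The control regions' change captures what would have happened anyway
    (seasonality, promotions, macro trends); subtracting it isolates the
    effect of the spend change in the treated regions.
    """
    treat_change = mean(treat_post) - mean(treat_pre)
    ctrl_change = mean(ctrl_post) - mean(ctrl_pre)
    return treat_change - ctrl_change


# Hypothetical weekly sales (in thousands) before/after cutting spend in the treated regions
treated_pre, treated_post = [100, 102, 98, 100], [95, 97, 93, 95]
control_pre, control_post = [100, 99, 101, 100], [104, 106, 102, 104]
effect = diff_in_diff(treated_pre, treated_post, control_pre, control_post)
```

A naive before/after comparison of the treated regions shows a drop of 5 per week, but the control regions grew by 4 over the same period (for example due to seasonality), so the estimated effect of the spend cut is a loss of 9 per week.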

When the experiment and the model disagree, and the test was well designed and executed, the experimental result typically trumps the model, indicating that the model needs calibration.
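One simple way to operationalize "disagree" is to check whether the model's implied effect for the test period falls inside the experiment's confidence interval. The function `needs_calibration`, the 95% normal-approximation interval, and the example numbers are assumptions for illustration, not a rule from any particular package.

```python
def needs_calibration(model_estimate: float, experiment_estimate: float,
                      experiment_se: float, z: float = 1.96) -> bool:
    """Flag a channel whose model-implied effect lies outside the experiment's
    approximate 95% confidence interval (normal approximation)."""
    low = experiment_estimate - z * experiment_se
    high = experiment_estimate + z * experiment_se
    return not (low <= model_estimate <= high)
```

For example, if a hypothetical experiment measures 450 incremental conversions with a standard error of 160 (interval of roughly 136 to 764), a model attributing 1,200 conversions to the channel over the same period would be flagged, while one attributing 600 would not.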

I would even go further and say:

If you cannot run experiments to validate your MMM, you should not try to build one in the first place.

The reasons for not being able to run them can be manifold:

lack of expertise (on setup or evaluation)

you cannot afford the “missed” revenue from turning off a channel

sales or spend volumes are too small to produce statistically significant effects

constraints in agreements with advertising partners or clients

company internal politics / being “afraid” of the truth

…
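The "too small to be significant" point can be checked before running a test with a rough power calculation. The sketch below assumes a two-sided two-sample comparison of mean weekly sales with equal variances and independent weeks (real sales data is autocorrelated, which makes things worse); `minimum_detectable_effect`, `required_weeks`, and all numbers are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def minimum_detectable_effect(sigma: float, n_per_group: int,
                              alpha: float = 0.05, power: float = 0.8) -> float:
    """Smallest true difference in mean weekly sales detectable with the given
    power, for n_per_group weeks in each of the test and control groups."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return (z_alpha + z_power) * sigma * sqrt(2 / n_per_group)


def required_weeks(sigma: float, effect: float,
                   alpha: float = 0.05, power: float = 0.8) -> int:
    """Weeks per group needed to detect a true weekly effect of size `effect`."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return ceil(2 * (z * sigma / effect) ** 2)


# Hypothetical: weekly sales fluctuate with a standard deviation of 20,000; the channel
# spends 5,000 per week at an assumed ROI of 2, so switching it off should cost ~10,000/week.
mde = minimum_detectable_effect(sigma=20_000, n_per_group=8)
expected_effect = 5_000 * 2.0
```

Here an 8-week test can only reliably detect effects of about 28,000 per week, almost three times the expected 10,000, and `required_weeks(20_000, 10_000)` comes out at 63 weeks per group, which is rarely practical.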

So you could argue:

Try to run incrementality tests and gather learnings BEFORE committing larger resources to hunting the “holy grail” of MMM. Since the test results can later feed into the MMM modeling process, this can be considered a quick win.
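To give a feel for how a test result can feed into the model, here is a generic Bayesian intuition rather than how any particular package implements calibration: if the model's estimate of a channel's ROI and the experiment's estimate are both treated as independent normal distributions, the combined (normal-normal) estimate is their precision-weighted average. The function `combine_estimates` and the numbers are hypothetical.

```python
from math import sqrt
from typing import Tuple


def combine_estimates(model_mean: float, model_sd: float,
                      exp_mean: float, exp_sd: float) -> Tuple[float, float]:
    """Precision-weighted (normal-normal) combination of two independent
    estimates of the same quantity, e.g. a channel's ROI."""
    w_model = 1 / model_sd ** 2  # precision = 1 / variance
    w_exp = 1 / exp_sd ** 2
    mean = (w_model * model_mean + w_exp * exp_mean) / (w_model + w_exp)
    sd = sqrt(1 / (w_model + w_exp))
    return mean, sd


# Hypothetical: the model says ROI 3.0 (sd 1.0), a lift test says ROI 1.5 (sd 0.5)
combined_mean, combined_sd = combine_estimates(3.0, 1.0, 1.5, 0.5)
```

Because the experiment is four times as precise here, the combined ROI lands at 1.8 with a standard deviation of about 0.45, much closer to the test result than to the original model estimate. The more precise the experiment, the harder it pulls.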

Getting Started with MMM

The barrier to entry for marketing mix modeling has never been lower. You can:

Use open-source frameworks

Opt for Software-as-a-Service providers

Work with agencies or consultancies for custom solutions

However, regardless of your approach, in-house expertise is crucial. This doesn't necessarily mean you need data scientists; you need people who understand:

Marketing incrementality concepts

How your different marketing channels might influence each other

Your business context and what data is available

How to design and interpret experiments

(Potentially) How to collaborate with vendors to optimize model features

These individuals will ensure your model accurately reflects your business reality and delivers actionable insights.

Lastly, you need executive buy-in, or an environment and culture that allows you to act on the results of any test or model. You’ll need to establish periodic routines in which you review the model, derive new tests, and reallocate the budget. If test results get stuck in political discussions and the budget ends up being allocated by gut feeling anyway, there is little point in investing heavily in MMM.

Looking Ahead

The evolution of marketing measurement shows no signs of slowing down.

As privacy regulations tighten, platforms turn their ecosystems into black boxes, and traditional tracking methods become less reliable, the importance of aggregate-level measurement will only grow. The good news is that the tools and methodologies are becoming more sophisticated and accessible every month. Too often, measurement projects fail not because of data issues but because of wrong expectations.

What's your experience with marketing mix modeling? Have you tried any of the open-source solutions? How challenging was the validation & implementation? Share your thoughts in the comments below!