Debugging Data Pipelines and Metrics: A Practical Guide

A systematic approach to identify the issue

Debugging data pipelines and metrics can be a nightmare, but with the right approach, it becomes manageable. Here’s what has worked best for me over the years:

Access, Layers and Lineage

To effectively debug, you need access to different systems:

the dashboard

data transformations

and ideally, the source system.

In smaller organizations, this might be feasible for a single person, but in larger enterprises, you’ll need to collaborate with others who have access to these systems.

When stakeholders are reluctant to grant you appropriate access, make sure to communicate how crucial it is for Quality Assurance and potentially loop in downstream consumers of data (Management) in order to get the necessary leverage.

Start from the End

Errors are usually detected at the final stages of data transformations or in the BI tool. Starting from the point where the issue is detected and work yourself backwards towards the source. Modern BI tools that query the data warehouse directly are superior because they allow you to see the SQL statements triggered by chart interactions. This makes it easier to identify missing filters or incorrect transformations.

(That’s one of the reasons I dislike more mature BI tools like Power BI or Tableau. Yes, you can use them in DirectQuery mode, but it’s often considered an Antipattern for performance, as they have their own engines to optimize for.)

Checklist for debugging Reports

I’m assuming there is actually an issue going on and it’s not just a misplaced or forgotten filter in the dashboard itself causing confusion:

Check the used Table: Ensure the correct table is used. It might sound basic, but sometimes development tables are still plugged in or you refer to another related table, which has similar but not identical logic applied.

Review Filters: Check what filters are applied to your query. If you can’t see the SQL query, go through each chart and page to identify any filters. I am generally not a fan of chart- and page-level filters as they make this debugging a nightmare, but sometimes you cannot avoid them.

A reason to keep your BI tools as dumb as possible.Replicate the SQL Query: Compare the SQL query with your expectations. Or even when you cannot access the SQL query created by the BI tool, composing a query from scratch based on your expected behaviours can help identify discrepancies: If your SQL query’s metric is higher than the report, some additional filter is in there, and vice-versa …

Trace a concrete example upstream

If the issue is at the source, you saved yourself some time and now need to decide:

Is some logic applied within the source which is not shown in the raw data?

Are some records filtered out during the ingestion into the Data Warehouse?

Does the data at the source perhaps need to be corrected?

It’s always helpful to have access to the source systems for debugging and data modelling. But you might need to ask a colleague more familiar with the system to answer these questions.

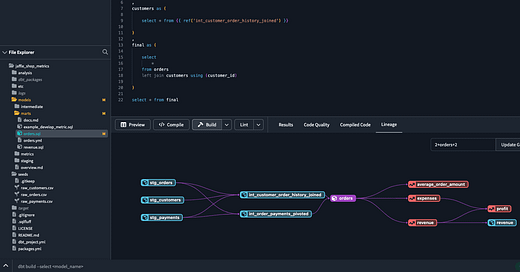

If the issue appears to be within the transformations, trace a concrete example through the different transformation layers with the help of table- or column-level lineage.

Fail Fast: After replicating the BI Tool logic with your mart-tables, jump straight to your clean-layer to determine if the issue is at source or in the data transformations.

This could be a user ID, a transaction ID, or a combination of filters like a specific date, product, and country.

-- select ... from {{ ref("mart_customer_payments") }}

-- where customer_id = 'abc'

select ... from {{ ref("entity_customer_payments") }}

where customer_id = 'abc'

-- move yourself through the different stages of the same "entity"

...

select ... from {{ ref("clean_stripe_customer_payments") }} as clean

left join {{ ref("prep_customer") }} as prep

on clean.stripe_customer_id = prep.stripe_customer_id

where prep.customer_id = 'abc'That’s why it’s always helpful to have a clean-layer WITHOUT heavy logic, just aligning namings and making e.g. dates usable (parsing them from text into a DATE-type from a different locale). If you already apply logic directly in the clean-step you need to spend more time debugging to figure out if the issue is in the transformations or already present at the source.

Modular CTEs

Modular CTEs, popularised by dbt, are very helpful for debugging.

They make the SQL code more readable and easier to understand.

By using small CTEs, named like functions or operations happening inside of them, you can switch between them to isolate the issue:

with flatten_tables as (...),

assign_payment_status as (...),

final_cte as (....)

select * from flatten_tables --assign_payment_status --final_cte

where customer_id = 'abc'

This way, you don’t need to modify a lot of SQL code to jump from CTE to CTE.

Benefits of Modular CTEs

Firstly, Debugging: Modular CTEs help in isolating one transformation at a time by switching between different CTEs.

Secondly, Readability:

They enhance the readability of SQL code, making it easier to understand.

Also, by naming them like the operations with happen in them “filter_…”, “aggregate_…”, …) you can see at a glance which CTE might be relevant for your debugging.

In case you’re worrying that some SELECT * at the start or finish let your cloud bill explode: In my experience, modern cloud data warehouses are clever enough to figure out which columns are actually needed.

Personally, I also skip the “import-CTE” dbt suggests and start with actual transformations already. However, the “final-CTE” is a must to accelerate the debugging experience as described earlier.

Conclusion

In practice and especially with big complexity and organizations, it’s rare that a single person (maybe even a whole team) understands things end-to-end.

Debugging data pipelines and metrics will always remain a grind and require detective work through the different layers.

Modern technologies, namely lean BI tools with Direct Queries to the DWH as well as dbt for your lineage, make it easier than before.

I hope that by following these tips and using a systematic approach, you can identify and resolve issues more efficiently in the future! :)

Let me know what additional methods you use to debug broken metrics in your BI pipelines!